
LNG INDUSTRY MARCH 2016

Industry tolerance

While two infrastructures have admittedly been a necessity in the past, it is probably most accurate to say that users have ‘tolerated’ this situation rather than ‘preferred’ it. This is particularly true for IT departments tasked with managing the computing, software, network, and security infrastructures for multiple systems. It is equally true for the machinery engineers in a typical plant, who must convince their IT departments that they need a separate infrastructure, often consisting of different computers, operating systems, client applications, firewalls, remote access environments, security models, etc. Both stakeholders are inconvenienced in multiple ways, and both would like a solution that is less expensive to deploy and sustain because it shares, rather than duplicates, infrastructure.

Convergence

There has long been an appetite to converge process and vibration data, because the interaction between a machine and the process surrounding it is inevitable. Process conditions can adversely affect a machine, and failures are often due to external influences rather than normal wear and tear. For instance, in pumps, process conditions might lead to cavitation; in compressors, process conditions might lead to surge; and in a turbine generator, changing steam conditions might result in excessive differential expansion and a mechanical rub. Correlating process and vibration data is therefore frequently necessary when diagnosing problems in rotating machinery, particularly critical machinery that is an integral part of the process flow. Various cumbersome, and usually expensive, methods of integrating machinery and process data have existed for years, but they often required the data to be replicated in both systems. This led to the inevitable ‘two versions of the truth’ that never agreed precisely with one another. Until recently, the technology had simply not advanced sufficiently to allow these disparate types of data to converge in a single repository. As a result, separate repositories could share data with one another, but they remained just that: separate repositories.
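The following sketch shows one way to correlate process and vibration data. It assumes each system can export its samples as (timestamp, value) pairs sorted by time.

The two systems sample at different rates, so the sketch first pairs each vibration sample with the most recent process value (an ‘as-of’ join). It then computes a Pearson correlation coefficient over the aligned pairs. The function names, the sample data, and the choice of Pearson correlation are illustrative assumptions, not a method described in this article.

```python
from bisect import bisect_right
from math import sqrt


def align_asof(process, vibration):
    """Pair each vibration sample with the latest process sample at or before it.

    Both inputs are lists of (timestamp_sec, value) tuples sorted by timestamp.
    Vibration samples earlier than the first process sample are skipped.
    """
    process_times = [t for t, _ in process]
    pairs = []
    for t, vib_value in vibration:
        i = bisect_right(process_times, t) - 1  # index of last process time <= t
        if i >= 0:
            pairs.append((process[i][1], vib_value))
    return pairs


def pearson(pairs):
    """Pearson correlation coefficient of (x, y) pairs."""
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two aligned samples")
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    var_y = sum((y - mean_y) ** 2 for _, y in pairs)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: a process tag logged once per second, and a vibration
# amplitude reading taken at half-second offsets.
process = [(0.0, 5.0), (1.0, 4.0), (2.0, 3.0), (3.0, 2.0)]
vibration = [(0.5, 1.0), (1.5, 2.0), (2.5, 3.0), (3.5, 4.0)]

pairs = align_asof(process, vibration)
print(pairs)           # [(5.0, 1.0), (4.0, 2.0), (3.0, 3.0), (2.0, 4.0)]
print(pearson(pairs))  # -1.0: vibration rises as the process value falls
```

A strong coefficient only flags a relationship worth investigating. It does not by itself show that the process condition caused the vibration.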

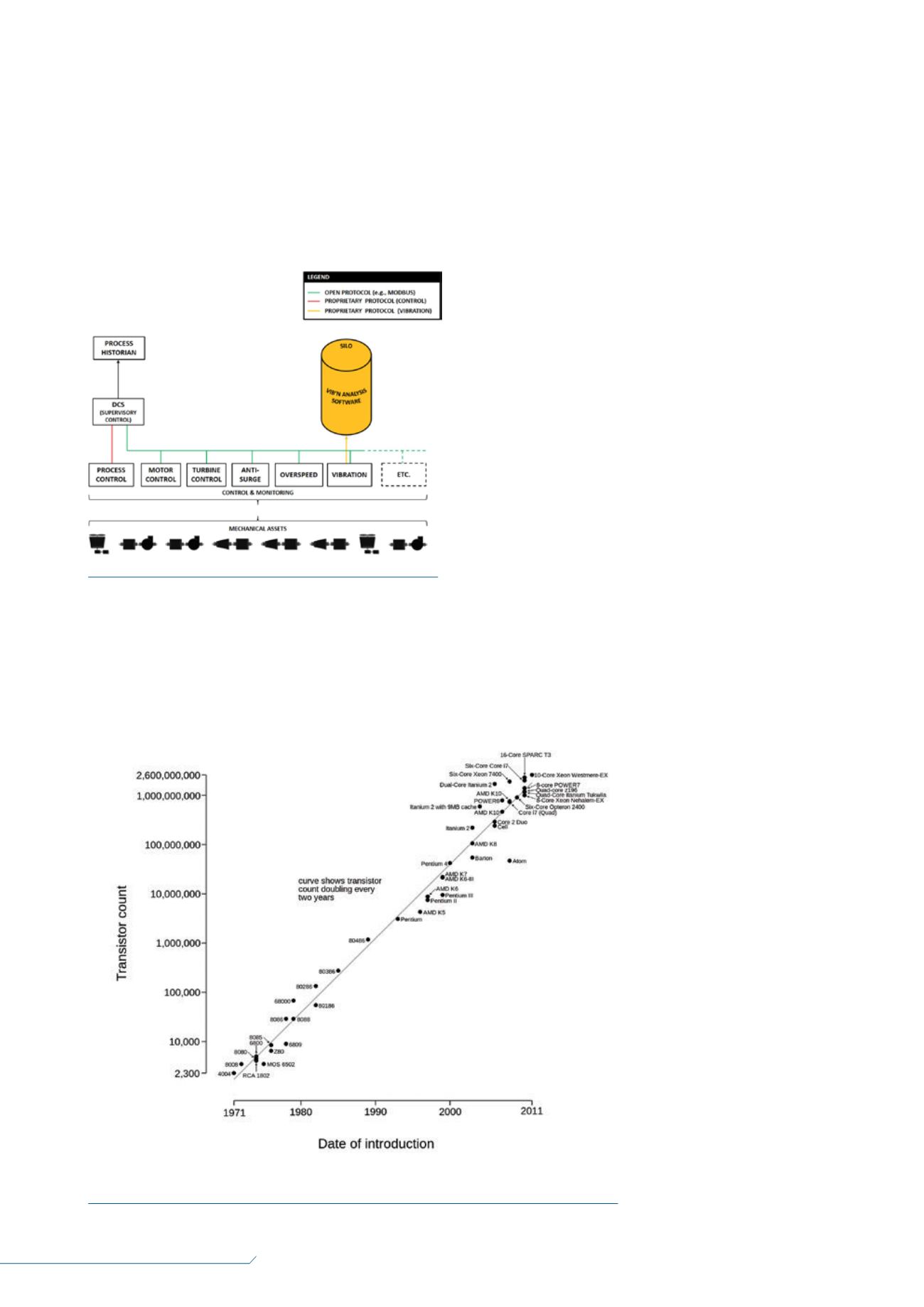

Meanwhile, technology has progressed at a staggering rate along the familiar Moore’s Law trajectory (Figure 2). Indeed, process data historians have pushed the envelope of data collection speeds so far that they now easily surpass the 1 million tag/sec. mark and continue to climb. The main speed constraint has been the rate at which hard drives can write to their platters. The advent of affordable solid-state drives and RAID arrays has circumvented even this constraint, and the speed limit is climbing ever higher.
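As a rough illustration, the aggregate load on a historian is the number of tags multiplied by the rate at which each tag updates. This sketch assumes that reading. It compares a hypothetical process-style load of 100 000 tags at 10 updates/sec with a vibration-style load of 1000 tags at 1000 updates/sec. The function name and both profiles are hypothetical.

```python
def updates_per_second(tag_count: int, updates_per_tag_per_sec: int) -> int:
    """Aggregate historian load: tags multiplied by each tag's update rate."""
    return tag_count * updates_per_tag_per_sec


# Process-style load (hypothetical): many tags, each updated slowly.
process_style = updates_per_second(100_000, 10)
# Vibration-style load (hypothetical): few tags, each updated quickly.
vibration_style = updates_per_second(1_000, 1_000)

print(process_style, vibration_style)  # 1000000 1000000
```

Both mixes land on the same 1 million updates/sec. A historian fast enough for one profile could therefore, in principle, absorb the other. Real systems also carry per-sample overheads such as timestamps and quality flags, which this arithmetic ignores.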

100 000 x 10 = 1000 x 1000

Process historians evolved along a path where many thousands of points needed to be scanned, but at relatively slow rates. Update rates of 1 sec. for process data are often considered to be extremely

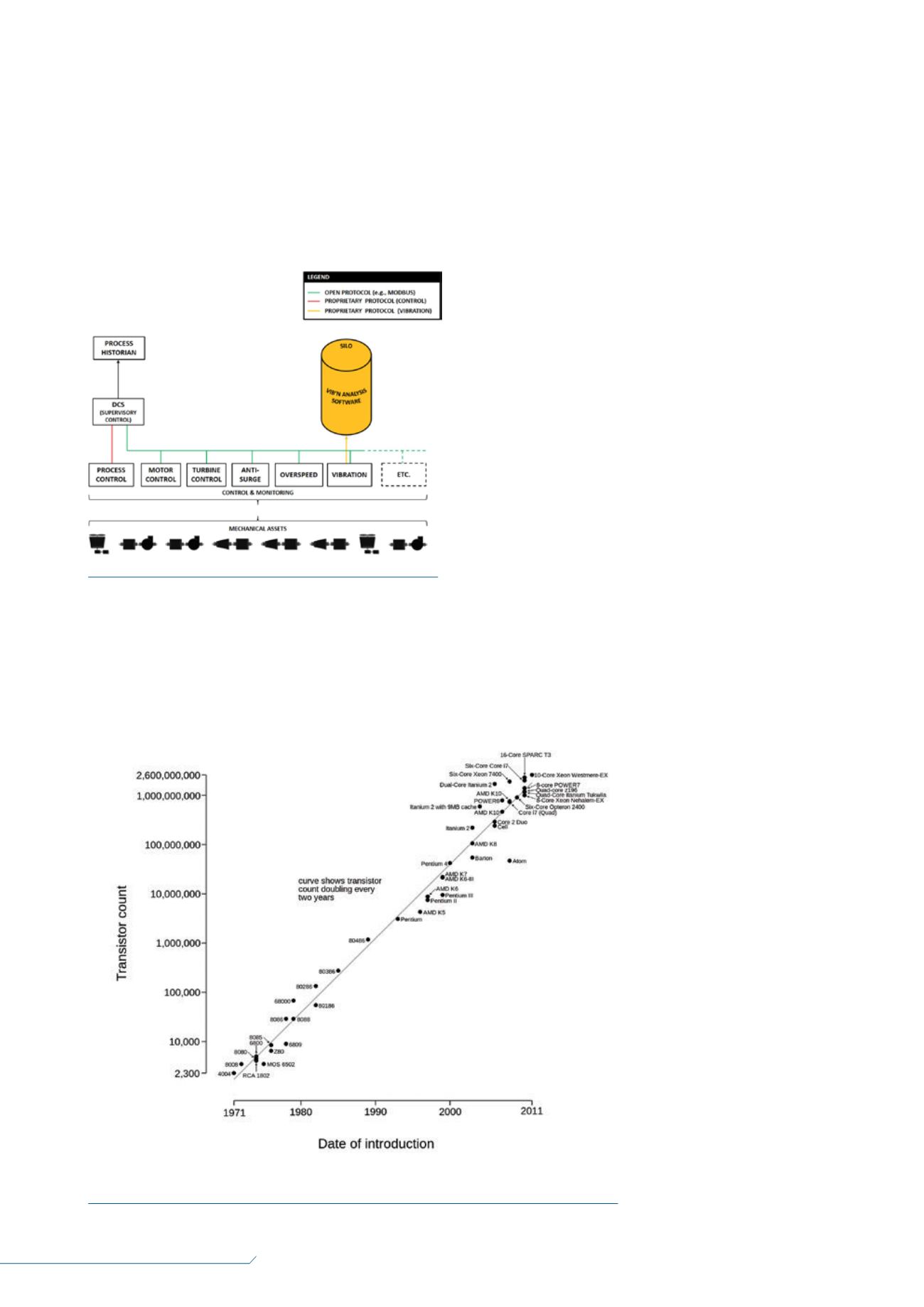

Figure 1.

Most data in the plant flows from purpose-built controllers/monitors into the Distributed Control System (DCS) and then into the process historian. However, the special needs of vibration analysis software have historically required a stand-alone ‘silo’ that uses its own infrastructure, separate from the process historian.

Figure 2.

Microprocessor transistor counts 1971 – 2011 and Moore’s Law.
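The Figure 2 trend can be checked with a back-of-the-envelope calculation. It assumes the commonly quoted doubling of transistor count roughly every two years. It also takes the Intel 4004's approximately 2300 transistors as the 1971 starting point. Neither figure is stated in this article.

$$
N(t) \approx N_{1971}\, 2^{(t-1971)/2}, \qquad N(2011) \approx 2300 \times 2^{20} = 2300 \times 1\,048\,576 \approx 2.4 \times 10^{9}
$$

Forty years of two-year doublings is 20 doublings, or roughly a millionfold increase. That carries the count into the billions of transistors by 2011.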